AI as an opportunity for success

Artificial intelligence has moved from science fiction to your daily workflow. From email filters that catch spam to project management tools that suggest task priorities, AI quietly shapes how teams work together. Yet many professionals feel uncertain about what AI actually is and how to use it responsibly.

AI literacy has become as essential for modern teams as email skills were in the 1990s. Understanding AI basics helps teams work more effectively, make better decisions about which tools to adopt, and maintain security when handling sensitive information. Without that understanding, teams face real challenges, from missed opportunities to improve workflows to serious data privacy risks.

What is AI literacy?

AI literacy is the set of competencies that allows you to understand, evaluate, and use artificial intelligence effectively in your work. Think of it like learning to drive — you don't need to know how to build an engine, but you do need to understand the rules of the road, basic safety and how to operate the vehicle responsibly.

Unlike general digital literacy (knowing how to use computers and software), AI literacy focuses specifically on artificial intelligence tools. This includes understanding how AI works at a basic level, recognizing when AI is being used, and knowing how to evaluate whether AI-generated content is accurate and appropriate for your purposes.

The four pillars of AI literacy are:

Basic understanding: knowing what AI is and recognizing it in everyday tools

Critical evaluation: checking AI outputs for accuracy and bias

Ethical awareness: understanding privacy risks and ethical concerns

Practical skills: using AI tools effectively in your specific work context

Why AI literacy matters for teams

Remember when email first arrived in offices? Some people refused to use it, others sent everything via email (even when a quick conversation would work better) and many worried it would replace face-to-face communication entirely. The same pattern is happening with AI today.

Teams with strong AI literacy avoid these extremes. They know when AI helps and when human judgment works better. They can spot the difference between reliable AI suggestions and potential errors. Most importantly, they can have informed conversations about which AI tools to adopt and how to use them safely.

Consider this comparison:

| Challenge | Without AI literacy | With AI literacy |
|---|---|---|
| New AI tool introduced | Fear and resistance or blind acceptance | Informed evaluation of benefits and risks |
| AI generates incorrect data | Team uses it without question | Team spots the error and verifies information |
| Client asks about AI use | Vague or defensive responses | Clear explanation of how AI supports (not replaces) human work |
| Data security concerns | Unaware of risks when using AI tools | Careful about what information to share with AI |

Key AI literacy skills for everyday work

These practical skills will help you work confidently with AI tools in any role.

1. Understanding AI terminology

Let's decode the jargon you'll encounter:

Generative AI creates new content based on patterns it learned from existing data. When you ask ChatGPT to write an email or when design tools suggest layouts, that's generative AI at work.

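Machine learning refers to AI systems that learn patterns from examples instead of following hand-written rules, and that improve as they see more data. Your email's spam filter gets better at catching junk mail as it sees more examples, including messages you mark as spam. That's machine learning in action.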

Large language models (LLMs) are AI systems trained on massive amounts of text. They power chatbots and writing assistants by predicting what words should come next based on patterns they've learned.

Prompts are the instructions or questions you give to AI tools. Good prompts get better results, just like clear instructions help human colleagues understand what you need.

AI hallucinations happen when AI confidently presents false information as fact. The AI isn't lying — it's making its best guess based on patterns, but sometimes those guesses are wrong.
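To make "predicting what words should come next" more concrete, here is a deliberately tiny Python sketch. It is a bigram counter, not a real LLM: actual models use large neural networks, work on word fragments called tokens and take far more context into account. The function names (`train_bigrams`, `predict_next`, `generate`) and the sample text are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which word follows each word in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        followers[current][following] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(followers, start, length):
    """Build text by repeatedly predicting the next word from learned patterns."""
    output = [start]
    for _ in range(length):
        following = predict_next(followers, output[-1])
        if following is None:
            break
        output.append(following)
    return " ".join(output)


# Hypothetical training text: note that it says the budget is tight.
corpus = (
    "the project is on track . "
    "the team is on track . "
    "the budget is tight ."
)
model = train_bigrams(corpus)

print(predict_next(model, "is"))     # on
print(generate(model, "budget", 3))  # budget is on track
```

Nothing in the training text says the budget is on track. The model produces that sentence because "is on track" is the most common pattern it has seen after "is". That is a small-scale version of a hallucination: fluent, confident and wrong.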

2. Safe and ethical usage

Using AI responsibly means thinking before you click. Ask yourself:

What data am I sharing with this AI tool?

Would I be comfortable if this information became public?

Am I checking the AI's work or accepting it blindly?

Could this AI output reflect unfair biases?

For example, never input client passwords, financial data or personal employee information into public AI tools. If you're using AI to help with hiring decisions, double-check that it's not showing bias against certain groups.

3. Critical evaluation of AI outputs

AI can produce impressive results, but it's not perfect. Here's how to check AI-generated content:

Verify facts: if AI claims something happened in 2019, look it up. AI often gets dates, names and specific details wrong.

Check completeness: AI might give you three reasons when there are actually five. It tends to simplify complex topics.

Look for bias: if AI suggestions seem to favor one group or perspective, dig deeper. AI learns from human-created data, which includes human biases.

Trust your expertise: you know your field better than AI does. If something seems off, it probably is.

AI literacy frameworks explained

Frameworks give you a structured way to build AI literacy across your team. Think of them as a curriculum for AI learning.

1. Foundational knowledge

Start with the basics everyone needs to know:

How does AI learn? AI identifies patterns in data. Show it thousands of photos labeled "cat," and it learns what a cat looks like. Show it thousands of past project plans along with how each project turned out, and it can learn which patterns tend to go with success or failure.

What can't AI do? AI doesn't truly understand anything; it recognizes patterns. It can't replace human judgment, creativity or emotional intelligence. It also doesn't know about information it wasn't trained on, such as recent events or your team's internal documents, unless you supply that information yourself.
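The spam filter mentioned in the terminology section is a classic example of learning patterns from labeled data. The Python sketch below is a hypothetical, much-simplified naive Bayes classifier, not how any particular email provider works. It counts how often words appear in messages labeled spam or not spam ("ham"), and each new labeled example updates those counts, which is the sense in which such a filter "improves through experience." The class `ToySpamFilter` and its methods are invented for illustration.

```python
import math
from collections import Counter


class ToySpamFilter:
    """Hypothetical toy classifier: learns word counts from labeled messages."""

    def __init__(self):
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.message_counts = {"spam": 0, "ham": 0}

    def learn(self, text, label):
        # Each labeled message is new "experience" that updates the counts.
        self.word_counts[label].update(text.lower().split())
        self.message_counts[label] += 1

    def _word_prob(self, word, label, vocab_size):
        # Add-one smoothing so unseen words never get probability zero.
        counts = self.word_counts[label]
        return (counts[word] + 1) / (sum(counts.values()) + vocab_size)

    def spam_score(self, text):
        # Log-odds of spam vs. not spam; above zero means "looks like spam".
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        vocab_size = len(vocab) + 1  # +1 reserves room for unseen words
        score = math.log(
            (self.message_counts["spam"] + 1) / (self.message_counts["ham"] + 1)
        )
        for word in text.lower().split():
            score += math.log(self._word_prob(word, "spam", vocab_size))
            score -= math.log(self._word_prob(word, "ham", vocab_size))
        return score

    def is_spam(self, text):
        return self.spam_score(text) > 0


spam_filter = ToySpamFilter()
spam_filter.learn("win a free prize now", "spam")
spam_filter.learn("claim your free money", "spam")
spam_filter.learn("meeting notes for the project", "ham")
spam_filter.learn("lunch with the project team", "ham")

print(spam_filter.is_spam("free prize money"))          # True
print(spam_filter.is_spam("project meeting tomorrow"))  # False
```

The filter never "understands" what spam is; it only notices that words like "free" and "prize" showed up in messages someone labeled as spam. That is also why it can only be as good, and as fair, as the examples it learns from.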

2. Practical application

Moving from theory to practice means:

Choosing the right tool: not every task needs AI. Use it for repetitive work, initial drafts or data analysis, but not for final decisions or sensitive communications.

Writing effective prompts: be specific. Instead of "write an email," try "write a friendly follow-up email to a client about their project deadline next Friday."

Integrating AI into workflows: start small. Use AI for one task, get comfortable and then expand.

Documenting AI use: keep track of when and how you use AI, especially for client work.

3. Ethical and social awareness

The bigger picture includes understanding how AI affects your workplace and society. This means considering questions like: Will AI change team roles? How do we maintain transparency with clients? What happens to the human skills we're automating?

Responsible and ethical AI use

1. Data privacy and security

Your data is only as safe as the AI tool you're using. Before entering any information, know:

Where your data goes: free AI tools often use your inputs to train their systems

What the privacy policy says: look for clear statements about data use and storage

Whether it meets compliance standards: tools like MeisterTask maintain GDPR compliance and host data in the EU, making them suitable for organizations with strict privacy requirements

2. Bias and fairness

AI learns from human data, which means it can learn human prejudices. Watch for:

Selection bias: AI might favor certain types of candidates or solutions based on historical data

Language bias: AI might use gendered language or cultural assumptions

Confirmation bias: AI might reinforce what it thinks you want to hear

Combat bias by using diverse data sources, checking AI suggestions against your values and including different perspectives in decision-making.

3. Transparency and accountability

Being transparent about AI use builds trust:

Tell clients and colleagues when AI assists your work

Maintain human oversight for all important decisions

Create clear guidelines about acceptable AI use

Take responsibility for AI outputs — you're accountable for what you deliver, whether AI helped or not

Next steps for AI-literate teams

Ready to build AI literacy in your team? Start here:

Begin with low-stakes experiments. Use AI for meeting notes or first drafts — tasks where errors won't cause major problems. As comfort grows, expand to more complex uses.

Create team guidelines together. Discuss what feels appropriate and what doesn't. Document these decisions so everyone stays aligned.

Schedule regular check-ins about AI use. What's working? What isn't? How can you improve? Make AI literacy an ongoing conversation, not a one-time training.

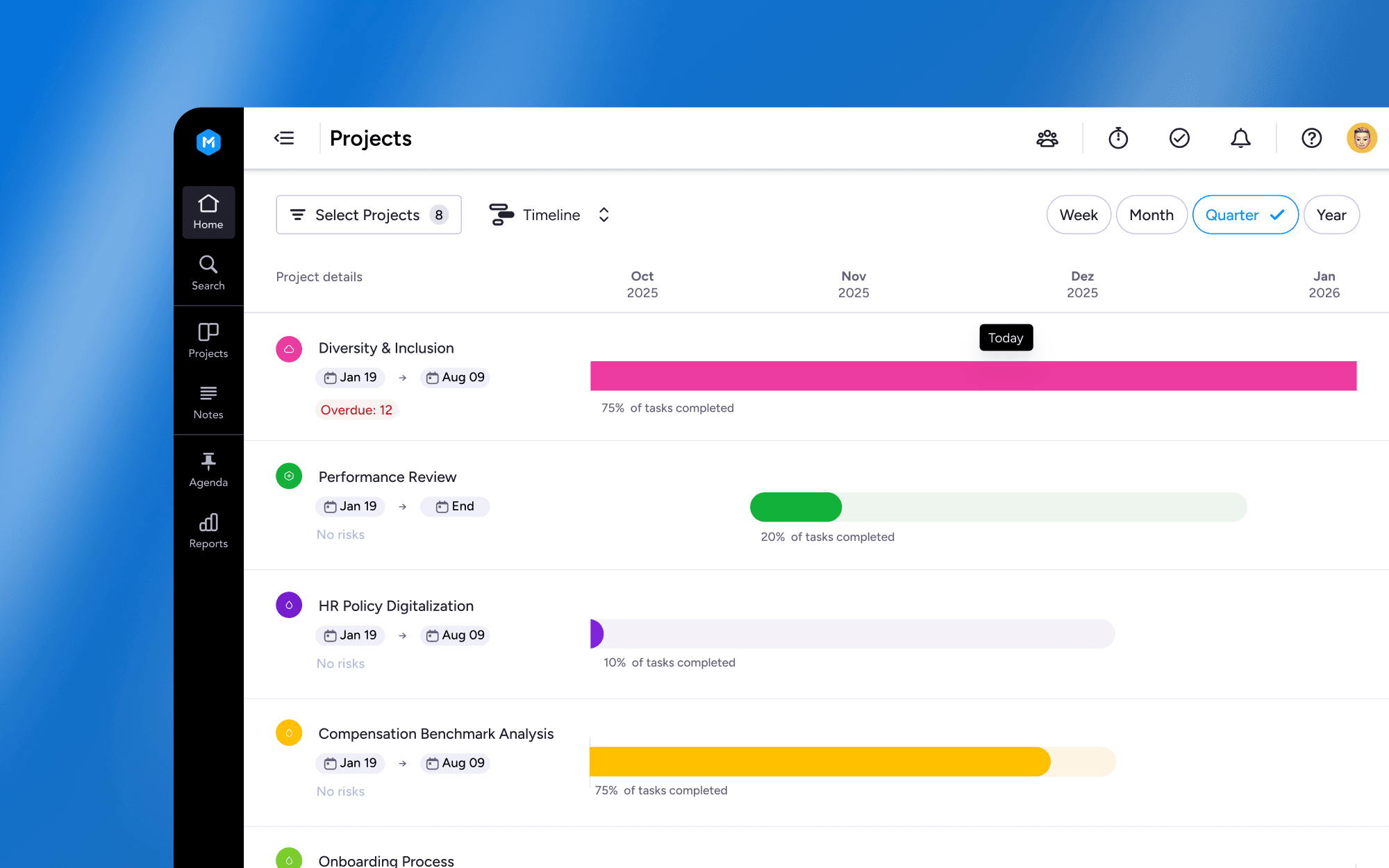

Choose tools that support your learning journey. MeisterTask's AI features provide a safe environment to practice AI literacy while maintaining the security and privacy standards your organization requires. With built-in AI assistance for task management and clear data protection policies, it's an ideal platform for teams taking their first steps with AI.